State of the Art Cifar 10 Feedforward Neural Network

Training a Feed Forward Neural Network (FFNN) on GPU: Beginner's Guide

Dataset — CIFAR 10

If you want to get started with FFNNs (feed forward neural networks) but are not quite certain which dataset to pick to experiment with, you are at the right place. We come across neural network implementations everywhere from classical machine learning to deep neural networks. Today, neural networks are used for solving many business problems such as sales forecasting, customer research, data validation, and risk management. Let us start by asking a couple of key questions:

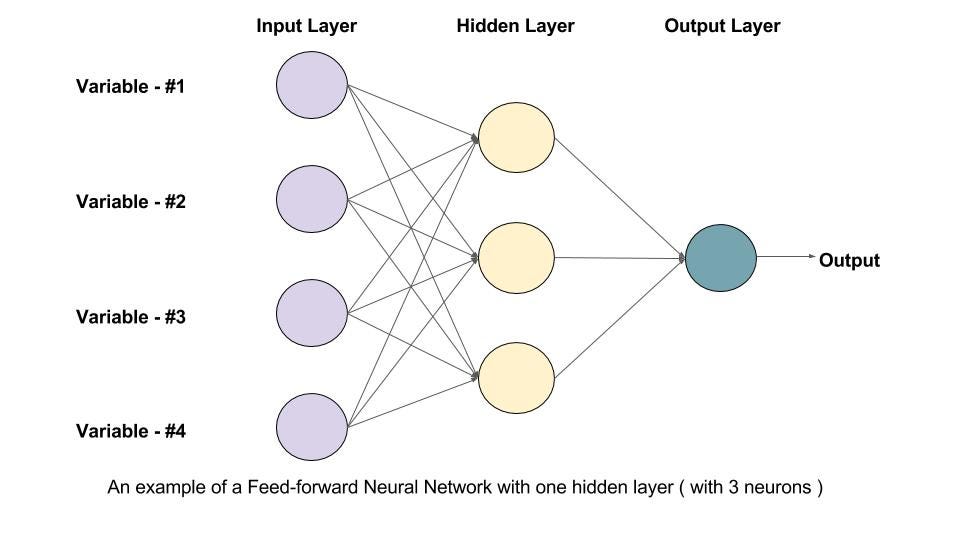

- What is an FFNN?

A feedforward neural network is an artificial neural network wherein connections between the nodes do not form a cycle. As such, it is different from its descendant: recurrent neural networks. The feedforward neural network was the first and simplest type of artificial neural network devised.

- What are GPUs?

A GPU (Graphics Processing Unit) is a specialized processor with dedicated memory that conventionally performs the floating point operations required for rendering graphics. In other words, it is a single-chip processor used for extensive graphical and mathematical computations, which frees up CPU cycles for other jobs. GPUs have many more cores than CPUs and are much faster at highly parallel workloads such as the matrix operations used in neural networks.

About the Dataset

The CIFAR-10 dataset (Canadian Institute For Advanced Research) is a collection of images that are commonly used to train machine learning and computer vision algorithms. It is one of the most widely used datasets for machine learning research. The CIFAR-10 dataset contains 60,000 32x32 color images in 10 different classes. The 10 different classes represent airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks. There are 6,000 images of each class.

Table of contents

- Introduction

- Data Pre Processing

2.1 Loading the required libraries

2.2 Get Data

- Exploring the CIFAR dataset

3.1 How many images do the training and testing datasets contain?

3.2 How many output classes does the dataset contain?

3.3 What is the shape of an image tensor from the dataset?

3.4 Can you determine the number of images belonging to each class?

- Preparing the data for training

4.1 Splitting into training and validation sets

4.2 Visualizing a batch

4.3 Configuring the model

4.4 Moving the model to GPU

- Training the model

- Testing with individual images

- Summary

- Future Piece of work

- References

№1: Introduction

The CIFAR-10 dataset contains 60,000 32x32 colour images in 10 different classes. CIFAR-10 is a set of images that can be used to teach an FFNN how to recognize objects. Since the images in CIFAR-10 are low-resolution (32x32), this dataset allows researchers to quickly try different algorithms to see what works.

List of classes in the CIFAR-10 dataset:

- Airplanes ✈️

- Cars 🚗

- Birds 🐦

- Cats 😺

- Deer 🐆

- Dogs 🐶

- Frogs 🐸

- Horses 🐴

- Ships 🚢

- Trucks 🚚

№2: Data Pre Processing

Loading required libraries

Since we are using PyTorch to build the neural network, I import all the related libraries in a single go.

Get Data

The dataset is available in the torchvision library. Alternatively, you can also access the dataset from Kaggle.

№3: Exploring the CIFAR10 dataset

Q: How many images do the training and testing datasets contain?

Q: How many output classes does the dataset contain?

Q: What is the shape of an image tensor from the dataset?

Q: Can you determine the number of images belonging to each class?
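The questions above can be answered with a few lines of code. The sketch below is one possible way to do it; the helper name `describe` is hypothetical, and a small synthetic dataset stands in for CIFAR-10 so that the example runs without a download.

```python
from collections import Counter

import torch
from torch.utils.data import TensorDataset


def describe(dataset, class_names):
    """Print size, image shape, and per-class counts of an (image, label) dataset."""
    image, _ = dataset[0]
    counts = Counter(int(label) for _, label in dataset)
    print("images:", len(dataset))
    print("classes:", len(class_names))
    print("image shape:", tuple(image.shape))
    for idx, name in enumerate(class_names):
        print(f"{name}: {counts[idx]}")
    return counts


# Synthetic stand-in (assumption): 20 random images, 2 per class.
names = ["airplane", "automobile", "bird", "cat", "deer",
         "dog", "frog", "horse", "ship", "truck"]
images = torch.rand(20, 3, 32, 32)
labels = torch.arange(20) % 10
demo_counts = describe(TensorDataset(images, labels), names)
```

With the real torchvision dataset, `dataset.classes` should supply the class names, so the call would look like `describe(dataset, dataset.classes)`.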

№4: Preparing the data for training

Splitting into training and validation sets

Before training starts, it is important to split the data into training and validation sets.

The dataset is split into a training set of 45,000 images and a validation set of 5,000 images.

We can now create data loaders to load the data in batches.
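One way to sketch the split and the data loaders is with `random_split` and `DataLoader` from `torch.utils.data`. The helper name `split_and_load`, the batch size of 128, and the seed are assumptions, and the synthetic dataset only mimics the 90/10 ratio of the 45,000/5,000 split.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset, random_split


def split_and_load(dataset, val_size, batch_size=128, seed=42):
    """Split `dataset` into train/validation subsets and wrap both in loaders."""
    train_size = len(dataset) - val_size
    generator = torch.Generator().manual_seed(seed)  # reproducible split
    train_ds, val_ds = random_split(dataset, [train_size, val_size], generator=generator)
    # Shuffle only the training data; validation can use larger batches
    # because no gradients are stored during evaluation.
    train_loader = DataLoader(train_ds, batch_size=batch_size, shuffle=True)
    val_loader = DataLoader(val_ds, batch_size=batch_size * 2)
    return train_ds, val_ds, train_loader, val_loader


# Synthetic stand-in (assumption): 1,000 random images instead of 50,000.
stand_in = TensorDataset(torch.rand(1000, 3, 32, 32), torch.randint(0, 10, (1000,)))
train_ds, val_ds, train_loader, val_loader = split_and_load(stand_in, val_size=100)
images, labels = next(iter(train_loader))
print(len(train_ds), len(val_ds), images.shape)
```

For the real dataset the call would be `split_and_load(dataset, val_size=5000)`.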

Let's visualize a batch of data using the make_grid helper function from Torchvision.

Visualizing a batch

Can you label all the images by looking at them? Trying to label a random sample of the data manually is a good way to estimate the difficulty of the problem, and to identify errors in labeling, if any.

Configuring the model

I write an accuracy function that calculates the model accuracy by comparing the predicted class with the actual class label.
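A minimal sketch of such an accuracy function, assuming the model outputs one row of class scores per image. The small hand-made tensors at the end are only a sanity check.

```python
import torch


def accuracy(outputs, labels):
    """Fraction of rows in `outputs` whose largest score matches `labels`."""
    _, preds = torch.max(outputs, dim=1)  # index of the highest score per row
    return torch.tensor(torch.sum(preds == labels).item() / len(preds))


# Rows 0 and 1 are predicted correctly, row 2 is not.
logits = torch.tensor([[2.0, 1.0], [0.0, 3.0], [5.0, 1.0]])
targets = torch.tensor([0, 1, 1])
acc = accuracy(logits, targets)
print(acc)
```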

I write an ImageClassificationBase class that contains 4 functions: one each for the training and validation sets, which compute the loss and accuracy; 'validation_epoch_end', which combines the losses and accuracies for each epoch; and 'epoch_end', which prints 'val_loss' and 'val_acc' at the end of each epoch.
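The class described above could look roughly like the sketch below. The method names `training_step` and `validation_step`, the subclass `CIFAR10Model`, and its layer sizes are assumptions for illustration and may differ from the original notebook. The accuracy helper is repeated so the block runs on its own.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def accuracy(outputs, labels):
    """Share of predictions that match the labels."""
    return (outputs.argmax(dim=1) == labels).float().mean()


class ImageClassificationBase(nn.Module):
    def training_step(self, batch):
        images, labels = batch
        return F.cross_entropy(self(images), labels)  # loss used for backprop

    def validation_step(self, batch):
        images, labels = batch
        out = self(images)
        return {"val_loss": F.cross_entropy(out, labels).detach(),
                "val_acc": accuracy(out, labels)}

    def validation_epoch_end(self, outputs):
        # Average the per-batch metrics into one number per epoch.
        val_loss = torch.stack([x["val_loss"] for x in outputs]).mean()
        val_acc = torch.stack([x["val_acc"] for x in outputs]).mean()
        return {"val_loss": val_loss.item(), "val_acc": val_acc.item()}

    def epoch_end(self, epoch, result):
        print(f"Epoch [{epoch}], val_loss: {result['val_loss']:.4f}, "
              f"val_acc: {result['val_acc']:.4f}")


class CIFAR10Model(ImageClassificationBase):
    """Hypothetical FFNN; the hidden layer sizes are illustrative only."""

    def __init__(self, in_size=3 * 32 * 32, hidden_sizes=(256, 128), num_classes=10):
        super().__init__()
        sizes = [in_size, *hidden_sizes]
        layers = [nn.Flatten()]
        for n_in, n_out in zip(sizes[:-1], sizes[1:]):
            layers += [nn.Linear(n_in, n_out), nn.ReLU()]
        layers.append(nn.Linear(sizes[-1], num_classes))
        self.network = nn.Sequential(*layers)

    def forward(self, xb):
        return self.network(xb)


# Random batch (assumption) to exercise the validation path once.
model = CIFAR10Model()
batch = (torch.rand(8, 3, 32, 32), torch.randint(0, 10, (8,)))
result = model.validation_epoch_end([model.validation_step(batch)])
model.epoch_end(0, result)
```

Keeping the loss and metric logic in a base class means a different architecture only needs to define `__init__` and `forward`.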

Moving the model to GPU

In this section, we will move our model to the GPU.

Let us first check if a GPU is available on your current system. If it is available, then set the default device to GPU; otherwise set it to CPU.

Now I load the training, validation, and test sets onto the default device available.
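A sketch of the device handling described above. The names `get_default_device`, `to_device`, and `DeviceDataLoader` are assumptions for illustration, and the synthetic loader is only there so the example runs on any machine, with or without a GPU.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def get_default_device():
    """Pick the GPU when CUDA is available, otherwise fall back to the CPU."""
    return torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")


def to_device(data, device):
    """Move a tensor, or a list/tuple of tensors, to `device`."""
    if isinstance(data, (list, tuple)):
        return [to_device(x, device) for x in data]
    return data.to(device, non_blocking=True)


class DeviceDataLoader:
    """Wrap a DataLoader so every batch is moved to `device` when it is yielded."""

    def __init__(self, loader, device):
        self.loader = loader
        self.device = device

    def __iter__(self):
        for batch in self.loader:
            yield to_device(batch, self.device)

    def __len__(self):
        return len(self.loader)


device = get_default_device()
stand_in = TensorDataset(torch.rand(64, 3, 32, 32), torch.randint(0, 10, (64,)))
loader = DeviceDataLoader(DataLoader(stand_in, batch_size=16), device)
images, labels = next(iter(loader))
print(device, images.device, len(loader))
```

Moving batches lazily, one at a time, avoids copying the whole dataset into GPU memory at once. The same `to_device` call should also work for the model itself.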

Let us also define a couple of helper functions for plotting the losses & accuracies.
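The plotting helpers might look like the sketch below. It assumes that the training history is a list of dictionaries with `val_loss` and `val_acc` keys, one per epoch; that format is an assumption, not something the text confirms.

```python
import matplotlib.pyplot as plt


def plot_losses(history):
    """Plot validation loss per epoch; `history` is a list of metric dicts."""
    fig, ax = plt.subplots()
    ax.plot([h["val_loss"] for h in history], "-x")
    ax.set_xlabel("epoch")
    ax.set_ylabel("loss")
    ax.set_title("Loss vs. number of epochs")
    return fig


def plot_accuracies(history):
    """Plot validation accuracy per epoch from the same history format."""
    fig, ax = plt.subplots()
    ax.plot([h["val_acc"] for h in history], "-x")
    ax.set_xlabel("epoch")
    ax.set_ylabel("accuracy")
    ax.set_title("Accuracy vs. number of epochs")
    return fig
```

Both functions return the figure, so in a script you can call `plot_losses(history).savefig("loss.png")` instead of relying on notebook display.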

№5: Training the model

We can make several attempts at training the model. Each time, try a different architecture and a different set of learning rates. Here are some ideas to try:

- Increase or decrease the number of hidden layers

- Increase or decrease the size of each hidden layer

- Try different activation functions

- Try training for a different number of epochs

- Try different learning rates in every epoch

What's the highest validation accuracy you can get to? Can you get to 50% accuracy? What about 60%?

Without training, val_acc is around 10 percent. This is equivalent to a random guess: the probability of predicting the correct class is 1 out of 10.

Let us start the training now.
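A training loop for this setup can be sketched as follows. To keep the block self-contained it uses a plain `nn.Sequential` model and computes the loss directly instead of going through ImageClassificationBase. The function names `evaluate` and `fit`, the SGD optimizer, the learning rate, and the synthetic data are all assumptions.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset


@torch.no_grad()
def evaluate(model, loader):
    """Return the mean validation loss and accuracy over `loader`."""
    model.eval()
    total_loss, correct, seen = 0.0, 0, 0
    for images, labels in loader:
        out = model(images)
        total_loss += F.cross_entropy(out, labels, reduction="sum").item()
        correct += (out.argmax(dim=1) == labels).sum().item()
        seen += len(labels)
    return {"val_loss": total_loss / seen, "val_acc": correct / seen}


def fit(epochs, lr, model, train_loader, val_loader, opt_func=torch.optim.SGD):
    """Train with gradient descent and record validation metrics per epoch."""
    optimizer = opt_func(model.parameters(), lr)
    history = []
    for epoch in range(epochs):
        model.train()
        for images, labels in train_loader:
            loss = F.cross_entropy(model(images), labels)
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
        result = evaluate(model, val_loader)
        print(f"Epoch [{epoch}], val_loss: {result['val_loss']:.4f}, "
              f"val_acc: {result['val_acc']:.4f}")
        history.append(result)
    return history


# Synthetic data and a small hypothetical network, only to exercise the loop.
torch.manual_seed(0)
data = TensorDataset(torch.rand(256, 3, 32, 32), torch.randint(0, 10, (256,)))
train_loader = DataLoader(data, batch_size=64, shuffle=True)
val_loader = DataLoader(data, batch_size=128)
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 64), nn.ReLU(), nn.Linear(64, 10))
history = [evaluate(model, val_loader)]  # untrained baseline
history += fit(2, 0.1, model, train_loader, val_loader)
```

Calling `fit` several times with different learning rates and appending to the same `history` is one way to try the ideas listed above.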

I plot the captured results via matplotlib.

It's quite clear from the above picture that the model probably won't cross the accuracy threshold of 48% even after training for a very long time. One possible reason for this is that the learning rate might be too high. The model's parameters may be "bouncing" around the optimal set of parameters for the lowest loss. We can try reducing the learning rate and training for a few more epochs to see if it helps.

The more likely reason is that the model just isn't powerful enough. Hence, to improve the model performance, we need a better technique to extract features efficiently from the input images. This can be achieved using a convolutional neural network (CNN).

In my next notebook, I will show how CNNs are implemented and how they can be used to improve the model performance.

№6: Testing with individual images

Loading the testing set from the Torchvision library

Let's define a helper function predict_image, which returns the predicted label for a single image tensor.
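A minimal sketch of `predict_image`, assuming the model returns one score per class. The argument list and the stand-in model are hypothetical; the stand-in has zero weights and a bias that favors class index 3, so the result is predictable without training.

```python
import torch
import torch.nn as nn


def predict_image(img, model, classes, device=torch.device("cpu")):
    """Return the class name the model assigns to a single (C, H, W) image."""
    xb = img.unsqueeze(0).to(device)  # add a batch dimension of size 1
    model.eval()
    with torch.no_grad():
        yb = model(xb)
    pred = yb.argmax(dim=1)  # index of the highest score
    return classes[pred[0].item()]


classes = ["airplane", "automobile", "bird", "cat", "deer",
           "dog", "frog", "horse", "ship", "truck"]

# Stand-in model (assumption): zero weights, bias makes index 3 always win.
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
with torch.no_grad():
    model[1].weight.zero_()
    model[1].bias.zero_()
    model[1].bias[3] = 1.0

label = predict_image(torch.rand(3, 32, 32), model, classes)
print(label)
```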

Checking the label and predicted values for a few samples

Model performance on test set

№7: Summary

Here is a brief summary of the article and the step-by-step process we followed in training the FFNN on GPU.

- We briefly learned about the feed-forward neural network.

- We downloaded the dataset from the Torchvision library.

- We explored the dataset and tried to understand how many images each class has, the total number of images in the training and validation sets, etc.

- Data preparation before training

1. We split the data into training and validation sets.

2. We visualized a batch of the dataset.

3. We did the basic model configuration, such as defining the accuracy, evaluation, and fit functions. We also defined the ImageClassificationBase class.

4. We checked the availability of a GPU and moved the dataset to the GPU if available, or to the CPU otherwise.

- We trained the model and achieved an accuracy of about 48% and ran the same model on the test set.

- We randomly checked the model performance by running it on a few testing samples.

№8: Future Work

- The model performance can be further improved if a CNN architecture is implemented.

- Try implementing the model in another deep learning framework, TensorFlow.

- Try improving the model performance by updating the number of layers and changing the optimizer and loss function in the FFNN.

№9: References

- You can access and execute the complete notebook at this link: https://jovian.ai/hargurjeet/cfar-x-dataset-6e9d9

- https://pytorch.org/

- https://pytorch.org/docs/stable/generated/torch.nn.CrossEntropyLoss.html

- https://jovian.ai/learn/deep-learning-with-pytorch-zero-to-gans

I really hope you guys learned something from this post. Feel free to give a 👏 if you like what you learnt. This keeps me motivated.

I will be posting another article within this week on how CNN are implemented and can be used to improve the CIFAR 10 model performance.

Thanks for reading this article. Happy Learning 😃

Source: https://medium.com/mlearning-ai/training-feed-forward-neural-network-ffnn-on-gpu-beginners-guide-2d04254deca9
